by GenericJam

Tags: python, elixir

I wrote the first pass of this while working at a company that was transitioning from mostly Python to mostly (eventually all) Elixir. There were many other people who were technical but not programmers. Many people didn’t really understand why one programming language could be better at something than another so I wrote a truncated version of this to try to explain to anyone who cared why we wanted to go with Elixir. Naming my bias here, I’m an unabashed Elixir shill and I think Elixir as a language is probably superior in every dimension that I care about. You can consider this an opinion piece. I do like to have my opinions based on fact so I will try to back up what I say.

This is mostly on the merits of the languages themselves. The libraries and overall ecosystem and job prospects are at least a couple of different posts, which I’m not overly qualified to deliver.

I hang out in some places like Elixir Discord where people come in with Python (or similar) understanding looking for help picking up Elixir but Elixir is difficult to grasp due to their Python (et al) exposure. Hopefully this can be useful as a transition guide for them.

Similarities

Dynamic types (with efforts to include static types)

Extensibility

Virtual Machine

Open Source

Benevolent Dictator for Life (van Rossum retired)

'Rapid' development

Differences

Python

Elixir

Extremely mutable

Extremely immutable with caveats

Zero isolation

Excellent isolation

Sharing by shared access

Sharing by copying

OOPish

Functional-lite

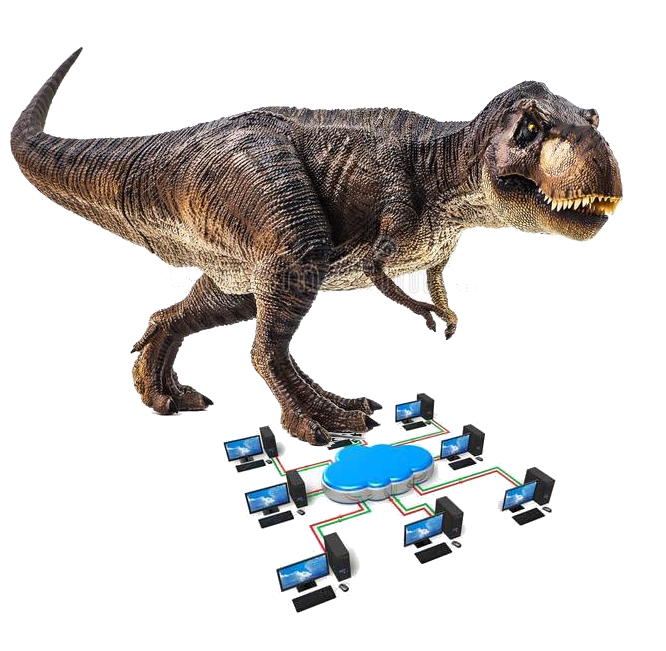

In a box

On a network

Easy globals

Globals are very difficult

Multi threaded with mutexes aka GIL

BEAM inherently multi threaded

Data races possible

Data races virtually impossible

Limited pattern matching recently added

Very powerful pattern matching inherited from Erlang

Looping via for loop and comprehensions

Looping via comprehensions, recursion and ‘functional’ helpers such as map and reduce

Concurrency with async/await and multithreading

Concurrency as a byproduct of BEAM behaviour - wait for 'free'

Interop is pretty limited

Interop options are plentiful

For anyone wondering why Elixir is better than Python for web services and anything that might need multithreading, distribution or fault tolerance: these are all the reasons I choose Elixir.

Some BEAM background

There are some fundamental differences between the BEAM (Bogdan’s Erlang Abstract Machine) and most (all) other programming paradigms. The BEAM is the VM (virtual machine) that runs Erlang and Elixir. Erlang and the BEAM were built at the same time, one facilitating the other. Elixir is a rethink on Erlang with the benefit of hindsight and a couple of decades of other languages to draw inspiration from. The BEAM was designed to be distributed as a first principle. This was the top requirement. It was designed to control telephone switches which are inherently ‘outside the box’. It has to be able to operate on more than one machine at once. To facilitate this, different nodes of the BEAM can talk to each other seamlessly. One process doesn’t even know if another process it’s messaging is on the same node or not. So by the time you make it to using the BEAM you’re getting distribution thrown in on the ground level. Of course nothing is completely free, you still have to account for latency and the network, it’s not going to break physics after all. There’s nothing stopping you from using a single node, in fact that’s most common, but as soon as you need distribution it’s already there.

Got distribution?

If you’ve got distribution, you already have some resilience but you’ve also inherited some ambiguity. You have resilience if you’re running on more than one machine and one of them has a catastrophic accident due to a disgruntled coworker, your system is still running. However, you also have ambiguity because one machine can’t guarantee that another one is still there, it just knows it was there the last time it checked. It’s very difficult to embrace ambiguity and fault tolerance at the same time if you insist on something like try/catch/throw. Erlang embraces ‘let it crash’ in unforeseen circumstances. If the circumstances have been seen then you should change the code to accommodate it. However, instead of trying to catch every error, it’s a pretty simple strategy to just kill the process and restart in a fresh state.
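To make ‘let it crash’ a little more concrete, here is a toy sketch written in Python rather than Elixir. The names `supervise` and `flaky_worker` are made up for illustration and this is not how OTP supervisors are implemented. It only shows the idea: the worker has no error handling of its own, and something outside it starts a fresh worker when it dies.

```python
def supervise(start_worker, max_restarts=3):
    """Run a worker; if it crashes, start a fresh one (hypothetical helper)."""
    restarts = 0
    while True:
        try:
            # Every attempt starts from scratch, so no half-broken state survives.
            return start_worker(), restarts
        except Exception:
            restarts += 1
            if restarts > max_restarts:
                raise  # give up and let the level above deal with it


# Stand-in for an unreliable outside world: the first two calls fail.
calls = {"count": 0}


def flaky_worker():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("something unforeseen happened")
    return "done"


result, restarts = supervise(flaky_worker)
print(result, "after", restarts, "restarts")
```

The worker itself stays simple because it never tries to recover. All of the recovery policy lives in one place, outside the code that can fail.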

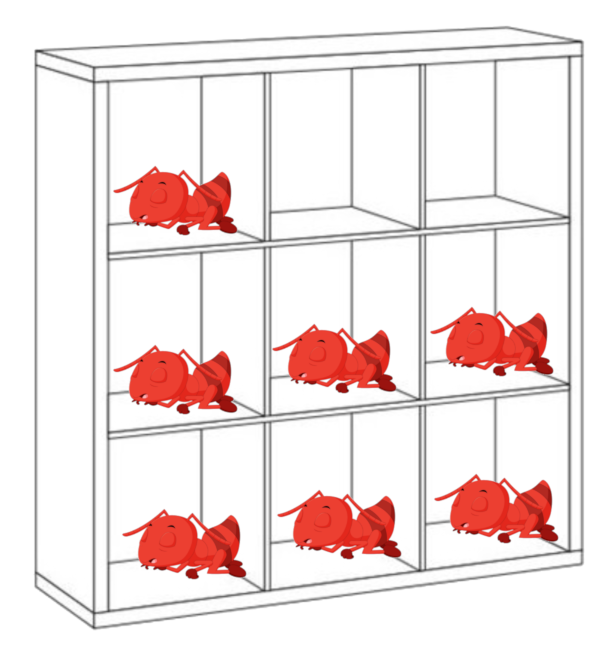

What is a BEAM process?

A process is similar to a system thread except the BEAM is the system, not the OS. Because the BEAM is making the rules it can make processes match the properties that are desirable for the BEAM. This means they can be very lightweight. When they are created they start out at 326 words of memory and many never increase in size. When they’re dormant, and they’re dormant most of the time, they occupy only memory, so they take up very few resources. This is why the BEAM can scale to hundreds of thousands of processes on a single machine. It’s also why the BEAM is so good at network related tasks or similar things where there is lots of waiting. It waits so cheaply, you might as well call it free.

By the loosest definition, the BEAM has ‘green threads’ in that they are not directly mapped to system threads. The term ‘green threads’ arose out of the Java community after Erlang was already using lightweight processes. BEAM processes are allocated entirely by the VM.

How does a process know when to be dormant?

I said a process is dormant most of the time. Let’s pretend a process wants to send a message to another process and then receive the reply. It could send the message and then immediately go into a receive block which is a bit like a switch (or case) statement where you’re waiting in real time for the variable in question to show up. While it’s waiting in this receive block, the process is put to sleep. When a message arrives for it, it is woken up to deal with it. Whatever is in the receive block is executed at this time.

There are three states that a live process can be in here.

1. It can be executing code right now. Shortly, it will be preemptively switched out and put in the back of the queue. Go to 2. If it runs into a receive block which has no message, it gets put to sleep. Go to 3.

2. It can be waiting in the schedulers’ queue(s) to execute. When it is first in the queue, go to 1.

3. It can be asleep if it is in a receive block. When it receives a message, go to 2.

The puppetmaster

Inside the BEAM there is a scheduler that decides which process runs when. The scheduler is pre-emptive, so each process that currently has work to do is given a slice of execution (measured in units of work called reductions rather than in wall-clock time) before it is switched out. The processes that are waiting in receive are not given any time until they have a message to deal with. By default the BEAM starts one scheduler thread per CPU core, and the number can be changed when the BEAM is started.
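The three states can be mimicked with a toy model in Python. This is only a sketch under heavy assumptions: generators stand in for processes, a bare `yield` stands in for `receive`, and there is no pre-emption at all (a process only gives up its turn when it reaches a receive). The names `run`, `ping` and `pong` are invented for the example and have nothing to do with the real BEAM internals.

```python
from collections import deque


def ping(send, log):
    send("pong", "ping")   # send a message, then...
    reply = yield          # ...wait in 'receive' (the process sleeps here)
    log.append(f"ping got {reply}")


def pong(send, log):
    msg = yield            # nothing to do until a message arrives
    log.append(f"pong got {msg}")
    send("ping", "pong")


def run(process_fns):
    mailboxes = {name: deque() for name in process_fns}
    log = []

    def send(to, msg):
        mailboxes[to].append(msg)

    procs = {name: fn(send, log) for name, fn in process_fns.items()}
    run_queue = deque(procs)   # state 2: waiting for a turn
    sleeping = set()           # state 3: asleep in receive
    started = set()

    while run_queue:
        name = run_queue.popleft()   # state 1: executing
        try:
            if name in started:
                # Woken up: hand over the message it was waiting for.
                procs[name].send(mailboxes[name].popleft())
            else:
                started.add(name)
                next(procs[name])    # run until the first receive
            sleeping.add(name)       # paused at a receive again
        except StopIteration:
            pass                     # the process finished
        # Any sleeper that now has mail goes back into the run queue.
        for other in sorted(sleeping):
            if mailboxes[other]:
                sleeping.remove(other)
                run_queue.append(other)
    return log


log = run({"ping": ping, "pong": pong})
print(log)   # ['pong got ping', 'ping got pong']
```

A sleeping process costs nothing here but the memory it occupies, which is the property the real scheduler exploits on a much larger scale.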

Why is the BEAM so great at concurrency?

Each process has its own dedicated memory that no other process can access. This prevents data races where two processes could be trying to access and modify the same piece of data at the same time. This can be a big problem in a no holds barred language like C which has no guardrails to stop you from doing this. In order for one process to get information to another one, the data has to be copied, not shared. You may find this inherently wasteful and it is until you consider you either pay this sort of price at the bottom by copying (or some other coordination strategy) or you pay it at the top with some set of coordination rules there. You have to pay for it somewhere. In the BEAM that cost is up front and already paid by the time you make it to the distributed stage. Internally, the BEAM has a few tricks where it will reuse memory within a process but from the programmer’s perspective the data is always immutable. If you want to change something you have to assign it to a new (or existing in Elixir, but not really, smoke, mirrors, nothing to see here) variable and as far as the programmer knows, everything gets copied across.

What about Python?

Oddly enough, Python also started out in a distributed environment, the Amoeba Operating System. I’m not aware of any vestigial remains from this period and it’s not clear to me whether Python had any awareness of distribution in that context or not. One of the goals of Python was to create a language that was easier to grasp for people who were technical but not programmers. The other language that was popular at the time that was also meant to be accessible was BASIC but this still required computer domain knowledge and was unnecessarily fiddly.

Extensibility

Elixir and Python are both extensible which is part of the story of their adoption. Most Python extensions are written in C, whereas Elixir can be extended with Elixir macros or NIFs (Native Implemented Functions) which can be written in C, Rust, Zig, etc.

Parallelism

Elixir inherits parallel computing via the BEAM which has it from the ground up due to process isolation and running its own VM which includes schedulers.

Python had no reason to develop parallel computing until it kind of ran out of capacity with a single thread. There are also cases where you want to run a background thread to keep the UI thread responsive. Python isn’t typically used to run GUIs (graphical user interfaces) so it’s more that people want to harness more than one system thread to do more than one task at once. This behavior is a bit bolted on for Python given that it was only added later. This generally doesn’t work out that well.

Guido van Rossum, talking about how parallelism works in Python.

A bit of background about how memory works

To make things fast, Python follows the example of C. C uses pointers to point to a chunk of memory on the computer: a pointer holds the starting address of that memory as the operating system presents it to the program. Python uses these pointers to point at a data structure which you can augment and manipulate. Presumably if it runs out of space it allocates a new chunk of memory but for the simple case you can always think of it as the same location. In Python most programmers think they’re dealing with a variable but they’ve actually got a pointer.

Houses vs Neighbourhoods

A reasonable metaphor of this pointer is something like a house address. You get the whole house to put whatever you want in it. It’s hard to pass a house around so instead you pass the house address around and if someone wants what’s in the house they go get it or they leave something behind or pick something up and leave something behind.

This works totally fine if you’re in ‘I am Legend’ and you’re literally the only human capable of altering the contents of the house. The problem is when more than one person can access the house at the same time maybe when you come back it’s been altered by someone else but seeing as this is a computer and not a human being it’s too stupid to know it’s been modified without its knowledge so it assumes the state of the house is the same way as it left it the last time.

As a result, you have two threads or processes competing for access to the same memory location. Imagine the plot twist that Will Smith isn’t the only uninfected human but he has no reason to suspect the other normal human is in the house with him at the same time and they just by happenstance don’t stumble into each other but they are modifying the contents of the house which puzzles the other person who starts to think they might be going crazy. (Also, you’ve got a scheduler which complicates things further because any of the processes or threads can be interrupted and told to drop what they’re doing so another thread gets computing time too. This doesn’t fit well into the metaphor so let’s pretend it doesn’t exist for now.)

Python and other languages have ‘locks’ to deal with this. The most famous one in Python is the Global Interpreter Lock or GIL, which in standard CPython lets only one thread run Python code at a time. Coming from Erlang/Elixir, if you ever see ‘global’ or ‘lock’ you know you’ve done something wrong. A lock is a way to say only you get to have access to something until you release the lock. It’s like you’ve stuck your own padlock on the house which means you know only you can access it.

This gets very problematic because that other process is waiting for you to take your padlock off because they need what’s in the house. Maybe what they need isn’t even related to the reason you padlocked the house. It gets even worse when you arrive at another house that this other process has padlocked and you’re waiting for them to remove their lock and they’re waiting for you to remove your lock. This is when two processes or threads get deadlocked.
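The two-padlock standoff is easy to reproduce. The sketch below uses Python’s `threading` module. The names `visitor`, `lock_a` and `lock_b` are invented for the example, and the barriers and the timeout are there only so the demo is repeatable and actually finishes. A real program in this situation would simply hang.

```python
import threading

lock_a = threading.Lock()            # padlock on house A
lock_b = threading.Lock()            # padlock on house B
both_locked = threading.Barrier(2)
both_tried = threading.Barrier(2)
got_second = {}


def visitor(name, mine, theirs):
    with mine:                       # padlock my own house
        both_locked.wait()           # wait until both houses are padlocked
        # Now try the other house. Without the timeout this would block forever.
        acquired = theirs.acquire(timeout=0.2)
        got_second[name] = acquired
        both_tried.wait()            # keep my padlock on until both have tried
        if acquired:
            theirs.release()


first = threading.Thread(target=visitor, args=("first", lock_a, lock_b))
second = threading.Thread(target=visitor, args=("second", lock_b, lock_a))
first.start()
second.start()
first.join()
second.join()

print(got_second)   # neither visitor managed to get into the other house
```

Each thread is individually reasonable. The deadlock only exists in the combination, which is what makes this class of bug so hard to spot in review.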

In Erlang/Elixir using this metaphor, each process has its own neighbourhood and is not allowed to enter another process’ neighbourhood. It has access to all the houses in its neighbourhood but if it wants to communicate with another neighbourhood it has to send a copy of whatever it wants to send there. So once again, kind of stretching the metaphor but in this universe, everyone has a 3d printer capable of printing anything. It sends the copy in a delivery van. In the Erlang/Elixir world this is called message passing. Conflicts over memory are impossible because all of the processes are completely isolated. There is no padlock to deadlock over (two processes can still end up each waiting for a message from the other, but nobody is blocked from their own data), and the processes just act in real time and don’t have to wait for another process to let go of a resource in order to access it. Now your neighbourhoods can be located in other cities and/or planets and the system scales quite easily.
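The delivery van can be imitated in Python to show what ‘sharing by copying’ buys you. This is a sketch, not how the BEAM does it: `Mailbox` is a made-up class that deep-copies every message on the way in, and an ordinary thread plays the part of the other neighbourhood.

```python
import copy
import queue
import threading


class Mailbox:
    """Hypothetical mailbox that copies every message on send."""

    def __init__(self):
        self._queue = queue.Queue()

    def send(self, message):
        self._queue.put(copy.deepcopy(message))   # the '3d printed' copy

    def receive(self):
        return self._queue.get()                  # blocks until a message arrives


received = []


def neighbour(mailbox):
    message = mailbox.receive()
    message["yo"] = "cat"        # only changes the receiver's own copy
    received.append(message)


mailbox = Mailbox()
worker = threading.Thread(target=neighbour, args=(mailbox,))
worker.start()

original = {"yo": "dog"}
mailbox.send(original)
worker.join()

print(original)   # {'yo': 'dog'}
print(received)   # [{'yo': 'cat'}]
```

The sender’s data is untouched no matter what the receiver does with its copy, so no lock is needed around `original`.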

Of course, there is a cost. You have to copy all the information sent from one process to another. In Python, it can just share access to the location but in Elixir it has to pay the cost of copying the information to share it. There are some situations where sharing the information will be more performant. The JVM (Java Virtual Machine) does this very well. It starts to run into a lot of problems if you want to run with more and more cores (parallel) or you want to have a system running on more than one machine (distributed). Elixir scales very well but the other model based on sharing the memory location runs into very serious difficulties when trying to scale due to locks, etc. if one of the processes wants to write. If both are merely reading, it’s probably safe.

Another really wonderful side benefit of having parallelism and distribution built in from the beginning is the ease of using this programming model. In Elixir, I just think as if the process is always going forward and if it has to wait, that’s totally fine, the VM (BEAM) takes care of that for me. In order to accommodate this ‘waiting’ period in most other languages, they rely on callbacks which can be really difficult to think about and are a huge source of bugs.

I wrote more about this superior thought process model here.

Immutability

Immutability is having a bit of a moment right now with some immutable features being sprinkled into various languages and frameworks. However, the BEAM has immutability all the time. This can be a bit difficult for newcomers to understand sometimes because how else do you update things without putting a new value there? Once you grasp the power of immutability, it’s difficult to go back.

    # Elixir immutability
    counter = 0
    # Result: 0

    # Elixir says no
    counter++

    # Elixir still says no
    counter += 1
    # Result: ** (SyntaxError)

    # Access to original `counter` is lost as `counter` is rebound
    counter = counter + 1
    # Result: 1

    foo = %{yo: "dog"}
    # Result: %{yo: "dog"}

    # No update can occur unless rebound or assigned - this doesn't get stored anywhere
    Map.update(foo, :yo, "", fn _ -> "cat" end)
    foo
    # Result: %{yo: "dog"}

    # Once it's rebound, then the update actually works
    foo = Map.update(foo, :yo, "", fn _ -> "cat" end)
    foo
    # Result: %{yo: "cat"}

Testing in Elixir is pretty straightforward. You have a basic version of a variable at the beginning of a test. You send it through your function(s) and receive the returned result. Compare it to the original. Does it look different in the way you expect? If so, pass, if no, fail.

I was used to this pattern already and I wrote a test in Python. It kept failing and it took me a while to understand why. The reason was that even if you assign a variable to another variable, it doesn’t create a copy unless you explicitly tell it to. It just maps the existing memory space to the new variable so at the end you’re comparing the exact same chunk of memory to itself so obviously there’s no difference.
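A small reconstruction of that kind of failing test, with invented names (`rename_pet` is a stand-in for whatever function was under test, not the original code):

```python
import copy


def rename_pet(record):
    # Hypothetical function under test: it mutates its argument in place.
    record["yo"] = "cat"
    return record


# The mistake: 'before' is just another name for the same dict.
data = {"yo": "dog"}
before = data
after = rename_pet(data)
print(before == after)    # True, so a "did it change?" check can never pass
print(before is after)    # True, both names refer to one object

# The fix: take an explicit copy before calling the function.
data = {"yo": "dog"}
snapshot = copy.deepcopy(data)
after = rename_pet(data)
print(snapshot == after)  # False, the change is now visible
```

In Elixir the first version would have worked as written, because the ‘before’ value could not have been changed by the function.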

Problem solving tends to be much more straightforward with immutability. It puts some very easy to follow guardrails in place. Less freedom in this case is a good thing. You should restrain yourself from being able to make mistakes if possible. Initially, immutability feels like a straightjacket but with time you may learn to see it as a guidance system that is always taking you in the right direction. It protects you from yourself and other programmers.

Python has a rather extreme version of mutability. You can drop a variable into a function, that function does who knows what to it and returns nothing but after that function the variable has been modified. Python has effectively no isolation because it shares the memory by default. In this regard it is the polar opposite of Elixir. The only way to address this in Python is just to have rules on your programming team about how these variables can be treated such as always return the variables from a function and reassign them where the function is called. Relying on programmers to adhere to these rules smacks a bit of dangling the baby over the volcano and hoping the rules save you. I can’t see how this isn’t a continual fountain of bugs.

    # Python examples
    counter = 0

    # Augmented assignment is no problem
    counter += 1
    # Result: 1

    # First example: integers are immutable, so `+=` rebinds counter2 to a
    # new value and counter is left alone
    counter = 0
    counter += 1
    print(["counter", counter])
    # Result:
    # ['counter', 1]

    counter2 = counter
    counter2 += 1
    print(["counter", counter])
    print(["counter2", counter2])
    # Result:
    # ['counter', 1]
    # ['counter2', 2]

    # Second example: assignment copies only the reference, so the new
    # reference will update the original memory location
    foo = {'yo': 'dog'}
    print(['foo', foo])
    # Result:
    # ['foo', {'yo': 'dog'}]

    foo2 = foo
    foo2["yo"] = 'cat'
    print(['foo', foo])
    # Result:
    # ['foo', {'yo': 'cat'}]

    # This function can modify the contents of the original location,
    # despite not returning anything
    # No isolation whatsoever
    def it_wasnt_me(val):
        val["yo"] = 'bunny'

    it_wasnt_me(foo)
    print(['foo', foo])
    # Result:
    # ['foo', {'yo': 'bunny'}]
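For contrast, this is roughly what the ‘always return and reassign’ team rule looks like when it is followed. The function name is invented. `MappingProxyType` is a standard library way to hand out a read-only view of a dict, shown here as one possible guardrail rather than a recommendation.

```python
from types import MappingProxyType


def it_really_wasnt_me(val):
    # Build and return a new dict instead of modifying the argument.
    return {**val, "yo": "bunny"}


foo = {"yo": "dog"}
foo2 = it_really_wasnt_me(foo)
print(foo)    # {'yo': 'dog'}
print(foo2)   # {'yo': 'bunny'}

# A read-only view makes accidental writes fail loudly.
frozen = MappingProxyType(foo)
try:
    frozen["yo"] = "cat"
    blocked = False
except TypeError:
    blocked = True
print(blocked)  # True
```

Nothing in the language forces either habit, which is the point being made above: the discipline has to come from the team.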

Pattern matching

Erlang and Elixir have a very powerful pattern matching system that is built into many aspects of the language. Even an ‘assignment’ in Elixir is actually a pattern match to a wide open variable. It succeeds so it stays. Here are some simple examples.

    # Oh look a completely unoccupied variable - match succeeds
    knock_knock = %{whos_there: "Nobel", who: "Nobel…that’s why I knocked!"}
    # Result: %{who: "Nobel…that’s why I knocked!", whos_there: "Nobel"}

    # Rebinding of knock_knock to new memory location - match succeeds
    knock_knock = %{whos_there: "Figs", who: "Figs the doorbell, it’s not working!"}
    # Result: %{who: "Figs the doorbell, it’s not working!", whos_there: "Figs"}

    # Match error: knock_knock has been rebound, and the pin (^) operator
    # prevents rebinding to force a match on the existing variable
    ^knock_knock = %{whos_there: "Nobel", who: "Nobel…that’s why I knocked!"}
    # Result: ** (MatchError) no match of right hand side value: %{who: "Nobel…that’s why I knocked!", whos_there: "Nobel"}

    # Full match succeeds
    %{whos_there: "Figs", who: "Figs the doorbell, it’s not working!"} = knock_knock
    # Result: %{who: "Figs the doorbell, it’s not working!", whos_there: "Figs"}

    # Partial match succeeds
    %{whos_there: "Figs"} = knock_knock
    # Result: %{who: "Figs the doorbell, it’s not working!", whos_there: "Figs"}

    # Partial match with destructuring to assign to unmatched variable
    %{whos_there: name} = knock_knock
    # Result: %{who: "Figs the doorbell, it’s not working!", whos_there: "Figs"}
    name
    # Result: "Figs"

    # Partial match with existing pinned variable fails to match
    name = "Nobel"
    %{whos_there: ^name} = knock_knock
    # Result: ** (MatchError) no match of right hand side value: %{who: "Figs the doorbell, it’s not working!", whos_there: "Figs"}

    # Put name back to "Figs" for the remaining examples
    name = "Figs"

    # Match on partial string
    "Fi" <> rest = name
    # Result: "Figs"
    rest
    # Result: "gs"

    # Match on list - '_' tells compiler to discard
    [_, 2, third] = [1, 2, 3]
    # Result: [1, 2, 3]
    third
    # Result: 3

    # Match on partial list
    [_, 2, ^third | tail] = [1, 2, 3, 4, 5]
    # Result: [1, 2, 3, 4, 5]
    tail
    # Result: [4, 5]

    # Pattern match in case
    answer =
      case knock_knock do
        %{whos_there: "Nobel"} -> "No problem"
        %{whos_there: "Figs"} -> "Figs it yourself"
        # The last one catches everything else
        _ -> "Hello stranger"
      end
    # Result: "Figs it yourself"

Pattern matching is also how what might be called ‘function overloading’ in other languages is achieved. Functions are identified in Elixir by their name and how many arguments they take, known on the BEAM as ‘arity’. Beyond that, each pattern match establishes a different clause of the same function so it’s a bit like a switch (case) statement combined with a function head. Pattern matching in function heads works the same as it would in a case with each of the clauses becoming less specific top to bottom.

    defmodule Joke do
      # Pattern match in function head
      def talk_back(%{whos_there: "Nobel"}) do
        "The JWs wore it out!"
      end

      def talk_back(%{whos_there: "Figs"}) do
        "Come back when you're dates!"
      end

      def talk_back(%{whos_there: name}) do
        "I've never heard of you, #{name}!"
      end
    end

    Joke.talk_back(knock_knock)
    # Result: "Come back when you're dates!"

Python has recently introduced pattern matching but is very late to the party and only emulates the behaviour of an Elixir case statement. It’s not really in the same class of the very powerful pattern matching system in Elixir.

Syntax

Syntactically, Python and Elixir are reasonably similar with one noted difference. Python, rather controversially, uses syntactically significant whitespace to denote a code block based on the number of common indentations. This is a loaded foot gun waiting to blow your big toe off. Elixir generally uses do and end keywords to define the beginning and end of a code block which is generally easier to follow for the programmer and the compiler. Personally, I’m in the curly brace camp (C, Java, JS, … almost every other sane language) but I can deal with keywords opening and closing. Explicit-ish block open and implicit close is painful in so many ways and also a source of bugs due to basically enforced looping in Python.

Looping

Having to cycle through a list or some other list-like structure like a map / dictionary is a pretty common thing in many programming languages. These can become deeply nested structures which invites you to have loops within loops. The answer to bring some clarity and sanity to this situation in Elixir is to have separation into functions.

The same task in Python and Elixir. These examples are meant to be representative of something that a mid level user would write. It’s of course subjective, but how does the language ‘want’ you to write something? A more advanced user of Python would use a list comprehension here, although the nested structure is still necessary. A savvy Elixir user could use a comprehension or one or more specialty functions from Enum or recursion. Elixir is incredibly flexible with regards to data transformation and extraction.

Python

    import requests
    import json

    response = requests.get("https://dummyjson.com/carts")
    data = response.text
    json_data = json.loads(data)

    product = {}
    for key, value in json_data.items():
        if key == "carts":
            for cart in value:
                for cart_product in cart["products"]:
                    if cart_product['title'] == 'Stiched Kurta plus trouser':
                        print("Found it!")
                        product = cart_product

    print(product)

    # Result:
    # Found it!
    # {
    #   'id': 42,
    #   'title': 'Stiched Kurta plus trouser',
    #   'price': 80,
    #   'quantity': 2,
    #   'total': 160,
    #   'discountPercentage': 15.37,
    #   'discountedPrice': 135
    # }

Elixir

    Finch.start_link(name: MyFinch)

    {:ok, resp} = Finch.build(:get, "https://dummyjson.com/carts") |> Finch.request(MyFinch)
    %{body: body} = resp
    json_data = Jason.decode!(body)
    %{"carts" => carts} = json_data

    found_product =
      carts
      |> Enum.reduce(%{}, fn
        %{"products" => products}, acc ->
          products
          |> Enum.reduce(acc, fn
            %{"title" => "Stiched Kurta plus trouser"} = product, _acc1 ->
              IO.puts("Found it!")
              product

            _, acc1 ->
              # Ignore
              acc1
          end)

        _, acc ->
          # Ignore
          acc
      end)

    IO.inspect(found_product)

    # Result:
    # Found it!
    # %{
    #   "discountPercentage" => 15.37,
    #   "discountedPrice" => 135,
    #   "id" => 42,
    #   "price" => 80,
    #   "quantity" => 2,
    #   "title" => "Stiched Kurta plus trouser",
    #   "total" => 160
    # }

The two examples are remarkably similar in line count, but you may notice that Python’s loops are nested three deep and Elixir’s only two.
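For completeness, this is roughly what the list comprehension version mentioned earlier might look like. To keep it self-contained it runs against a trimmed, made-up sample in the same shape as the API response instead of making the network call. It keeps the last match, as the loop version does.

```python
# Trimmed, made-up sample data in the same shape as the real response.
json_data = {
    "carts": [
        {"products": [{"id": 1, "title": "Something else"}]},
        {"products": [{"id": 42, "title": "Stiched Kurta plus trouser"}]},
    ],
    "total": 2,
}

matches = [
    cart_product
    for cart in json_data["carts"]
    for cart_product in cart["products"]
    if cart_product["title"] == "Stiched Kurta plus trouser"
]

# Same behaviour as the loop version: the last match wins, {} if none.
product = matches[-1] if matches else {}
print(product)   # {'id': 42, 'title': 'Stiched Kurta plus trouser'}
```

The indentation is gone but the two `for` clauses are still there, so the nesting has been flattened visually rather than removed.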

Simplicity?

One of the goals of Python was to reduce the barrier to entry for nonprogrammers which I think it has successfully done. However, it’s definitely showing its age. It’s kind of trying to cater to both ends of the spectrum: the complete newb as well as the advanced user that’s building and running machine learning models with it. The more advanced users will be the driving force here so I suspect the language is becoming less accessible to new users as they are not driving its development. It necessarily needs to become more complicated to serve the needs of the more advanced users of the community. It suffers from both ends as some ‘features’ such as using spaces to define code blocks which is supposed to be ‘user friendly’ becomes a serious thorn in the side of advanced users.

Elixir also has the goal of a lower barrier to entry but without having to sacrifice power. The language inherits its syntactic flavour from Ruby, a language well loved for its syntax. Elixir also is benefitting from the power of hindsight as a relatively new language with more examples of what worked and didn’t work. Also, as it is a retake on Erlang, it has the example to see what has aged well there and what hasn’t.

To say that Elixir is ‘simple’ is an overstatement but I do think it is as simple as it can be given the power, utility and flexibility it unlocks. Supervisors, Registries, GenServers, Agents and general interprocess communication are not simple per se but are as simple as they can be given the roles they serve. The whole idea of communicating effectively between threads is not a well trod path in most languages so this is a lot to take in for a newcomer. However, once you have these on board, it unlocks the capabilities of the BEAM.

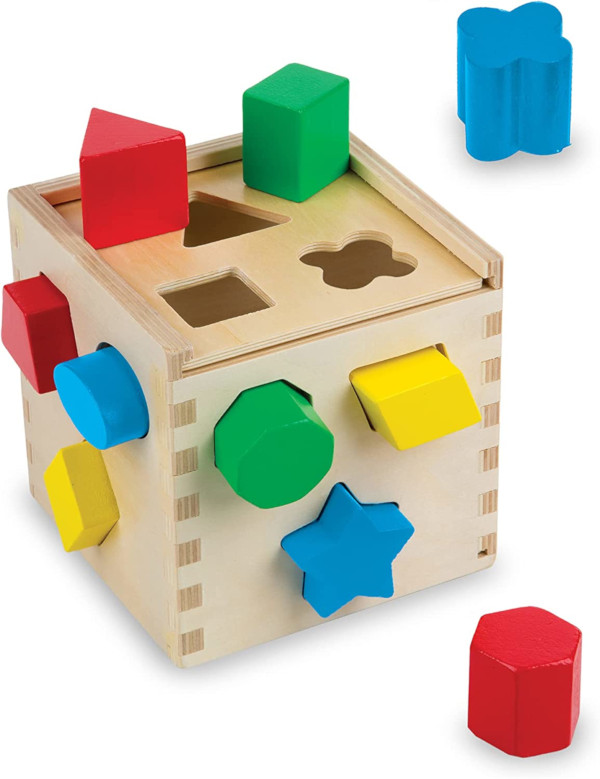

Functional Programming

One of the reasons why Elixir should be easier to learn by someone that has done high school math is that functions in Elixir and math feel the same and immutable variables have the same properties. One of the definitions of a mathematical function is that the same inputs create the same outputs. This is the case for pure functional programming. Anything a function does beyond computing its return value, the ‘extra bits’ it can throw off, is called a ‘side effect’. Some more pure functional languages such as Haskell try to push the side effects to the edges which can be a useful thing to do. However, side effects are the thing that gets your program in touch with the rest of the world so they are somewhat inevitable.

In the case of Elixir, reasons for side effects include sending and receiving messages, file or user I/O (input output), among other things. Apart from a handful of actions that are ‘effectful’, side effects are minimal. Once again, this is due to process isolation. One process can’t reach into another’s memory and modify anything so effects are minimized and the functions are mostly pure so it stays mostly true to its mathematical cousin.

Variables cannot be reassigned once they have been assigned just like in math. (Elixir caveats, smoke, mirrors, rabbits in and out of hats but technically they’re not reassigned, they are ‘rebound’, there’s just some below the stage swapsies to avoid forced variable enumeration like is resorted to in Erlang. Trust me, it’s better this way and it’s not actually that confusing once you grok it.) When you’re solving for a variable in algebra you might not know what it is but you know it’s not going to change and if it were to change midway the whole thing becomes pointlessly shifting sand.

In most programming languages, a variable is merely a label you stick on a value to tag it. You can take that label and put it on something else no problem. So the variable is whatever you say it is. Sometimes, especially in Python this is being changed beneath your feet without you being aware. If you pass off a variable to another function it can do whatever it wants to that memory space.

Functional programming in Python is very underdeveloped. Van Rossum has stated he doesn’t want to put in support for functional style programming.

In math, one function can use another. In programming the same is true. In functional programming, a function can call itself, typically until some condition is met. Every time a function is called, it puts another frame on the stack, so it adds more to the running memory of your program. If you have a function that wants to call itself 100,000 times before it quits, that’s a lot of frames and it can run out of stack space. Most languages that have support for ‘functional’ have some strategy of dropping frames. The most common being tail call optimisation (TCO) which doesn’t add a frame if the very last thing that’s done is the function calling itself. Python lacks any such optimisation, and CPython caps the call depth with a recursion limit (1,000 frames by default), so you have to be very careful if you want to deal with nested structures using some type of recursion. You can accidentally hit that limit, or run yourself out of memory if you raise it, if the structure you are iterating through is too long or deeply nested.
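A quick way to see this for yourself in Python. The function names are made up for the example. `recursive_sum` is written in tail call form (the recursive call is the very last thing it does), which is exactly the shape that TCO would turn into a loop in a language that has it.

```python
import sys


def recursive_sum(numbers, index=0, total=0):
    # Tail call form, but Python still adds one stack frame per element.
    if index == len(numbers):
        return total
    return recursive_sum(numbers, index + 1, total + numbers[index])


def loop_sum(numbers):
    total = 0
    for number in numbers:
        total += number
    return total


# A list comfortably longer than the interpreter's recursion limit.
numbers = list(range(sys.getrecursionlimit() * 2))

try:
    recursive_sum(numbers)
    outcome = "finished"
except RecursionError:
    outcome = "hit the recursion limit"

print(outcome)            # hit the recursion limit
print(loop_sum(numbers))  # the loop version has no such problem
```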

Paradigms

Both are multi paradigm to some extent. Python started out as a scripting language, which arguably is still its forte. At some point people started building more complex things with it so they needed to bring in more structure. Object Oriented Programming (OOP) was the hotness at the time so it was ‘obvious’ it needed classes to bag the functions and variables together. So Python is multi paradigm but you are still coerced into using the paradigms you don’t necessarily want to use because that’s the only way. If you’re writing something large and complex, you pretty much have to use OOP as that’s the only sane mechanism to use organisationally especially if you’re using a framework or library that enforces the paradigm.

Elixir could also be considered multi paradigm. It is ‘functional’ but not super strictly. It is ‘actor model’ friendly by a certain interpretation of actors. If that’s your take, there are actors in your system but not everything within the system is an actor. Actors most closely line up with GenServers but some people may also include processes in that group too. It depends on how heavily you squint. You can even take on OOP to some extent in Elixir. It certainly gives you the freedom to do so if that organizational style floats your fleet.

The general feel of an Elixir system is that of a smart pipeline that accepts data at one end, it flows through, gets reshaped and gets sent out the other side, transformed. For the most part this bucks the OOP trend of storing the data in objects.

Types

Elixir and Python are both dynamically typed with static typing knocking at the door. Python has been implementing types more and more and Elixir has various gradual type schemes available. Both systems are not really built into the language but are more of a gloss that are interpreted by some sort of a first pass compiler.

As near as I can tell, Python doesn’t have static types because van Rossum didn’t want to burden the users with the inconvenience. This is a reasonable strategy for a scripting language, but the experiment has crawled out of that particular petri dish. At the scales Python is now used at, types are a very useful sanity device, especially given Python’s lack of guardrails.

The main reason Elixir doesn’t have static types is because Erlang didn’t have them and that gets a bit into the weeds. One of the initial hard requirements for Erlang was to be able to have hot code upgrades. The code is replaced while it is running. This is a bit like swapping out the gas pedal in your car while you’re driving. They couldn’t figure out how to do hot code swaps and enforce static types at the same time, so static types were left on the cutting room floor. Trying to have both made the system less stable, and stability was valued over types and performance.

Concurrency

Concurrency in Python is a complex and patchy hot mess.

“Code written in the async/await style looks like regular synchronous code but works very differently. To understand how it works, one should be familiar with many non-trivial concepts including concurrency, parallelism, event loops, I/O multiplexing, asynchrony, cooperative multitasking and coroutines. Python’s implementation of async/await adds even more concepts to this list: generators, generator-based coroutines, native coroutines, yield and yield from. Because of this complexity, many Python programmers that use async/await do not realize how it actually works.” - tenthousandmeters.com

Elixir is simple once you understand the primitives of send and receive. The BEAM does the coordination for you. The primitive receive is actually kind of inconvenient to deal with on its own as it is a blocking operation. The way to deal with this is to put it in a recursive function that processes the receive and then calls itself again and receives and processes the next message if there is one in the message queue. If there isn’t one, it stops at the receive and is made dormant. Using this simple system it’s possible for a GenServer to maintain the illusion of being ‘always on’ and ‘always ready’ despite itself being a single process. For more on this, check out this great post on deconstructing GenServers.
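For readers coming from Python, here is a rough analogy of that receive loop, not a description of how the BEAM works. It assumes a `queue.Queue` standing in for the process mailbox and an OS thread standing in for the process. The message shapes (`"add"`, `"get"`, `"stop"`) and function names are invented for the sketch. It uses a `while` loop where Elixir would use a function that calls itself, since Python has no tail call optimisation.

```python
import queue
import threading


def counter_loop(mailbox, count=0):
    """Wait for a message, handle it, then go back to waiting."""
    while True:
        message = mailbox.get()  # blocks until something arrives, like receive
        if message[0] == "add":
            count += message[1]
        elif message[0] == "get":
            message[1].put(count)  # reply on the caller's own queue
        elif message[0] == "stop":
            return


def start_counter():
    """Start the loop in the background and hand back its mailbox."""
    mailbox = queue.Queue()
    worker = threading.Thread(target=counter_loop, args=(mailbox,), daemon=True)
    worker.start()
    return mailbox


def get_count(mailbox):
    """Ask for the current count and wait for the reply."""
    reply = queue.Queue()
    mailbox.put(("get", reply))
    return reply.get(timeout=1)


if __name__ == "__main__":
    counter = start_counter()
    counter.put(("add", 2))
    counter.put(("add", 3))
    print(get_count(counter))
    counter.put(("stop",))
```

The state (`count`) only ever lives inside the loop, and the outside world can only reach it by sending messages. That is the part of the idea that carries over. The isolation and scheduling guarantees of real BEAM processes do not.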

Interop

Python and the BEAM get along great but the BEAM is making all the effort.

The options for interacting with Python are pretty much limited to things like HTTP or the command line. Conceptually one could extend Python to interact with their system, although I’ve never heard of this being done. It’s safe to say the most obvious way is HTTP, as many microservices use.

The options for interacting with Elixir are all of the above plus Ports and NIFs. If you really wanted to, you could natively write an Erlang node in your language of choice.
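As a sketch of what the Python side of a command line or Port style integration might look like: a script that reads one request per line on stdin and writes one reply per line on stdout. The JSON-lines format, the `"sum"` operation and the function names are all assumptions made up for this example, not an established protocol. The Elixir side would start this script as an external program and talk to it over those two pipes.

```python
import json
import sys


def handle_request(line):
    """Turn one JSON request line into one JSON reply line."""
    request = json.loads(line)
    if request.get("op") == "sum":
        reply = {"ok": sum(request["values"])}
    else:
        reply = {"error": "unknown op"}
    return json.dumps(reply)


def serve(stdin=sys.stdin, stdout=sys.stdout):
    """Answer requests until the other side closes the pipe."""
    for line in stdin:
        if line.strip():
            stdout.write(handle_request(line) + "\n")
            stdout.flush()  # flush so the caller sees each reply promptly
```

A real script would finish by calling `serve()`. Note that all the work of starting, monitoring and restarting the Python program falls to whoever launched it, which is the sense in which the BEAM is making the effort.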

Ecosystem and Community

In many ways the Python community is a model of what became the Elixir community. Both are generally welcoming and friendly.

The size is less comparable as Python has been rolling for a lot longer. It started to hit its stride in the early 2000s. The size of the help corpus is enormous with many conflicting answers a mere Google search away. The documentation is generally quite good. One of the best reasons to go with Python is that with the wealth of libraries, your job may already be mostly done.

By comparison, the Elixir libraries available are much fewer in number. There is often only one good choice. For example if you want a web framework, it’s pretty much Phoenix or roll your own, whereas Python has at least Django and Flask as well as several minor ones.

That being said, due to its ease of use, you often don’t need a library or you can write your own simple lib and share it with the community. Most people in any language would rather write ten or twenty lines to avoid the external dependency. This is very often perfectly feasible.

In some areas, Python is clearly years ahead in library development, such as the embarrassment of riches in Pandas, NumPy, SciPy, NLTK, OpenCV as well as all the specialty tooling built around machine learning. This is easily a blog post on its own. If your goal was to quickly set up a machine learning model as a proof of concept, Python would probably be the better choice as it stands today.

Elixir has been able to benefit from seeing what other communities have had to find out the hard way, such as breaking changes in the language (Python 2.x v 3.x), the importance of package management, version management, etc. The older communities still bear the scars of this development but the newer languages can just skip to the solution.

I have had it with these mother lovin snakes on this mother lovin plane!

Once again, I am of the Elixir converted, trying to make more converts. In terms of basic capabilities, Elixir outshines Python on almost all of them. It’s possible that in some single threaded applications Python could be faster, but for the most part, for the types of jobs Python is called upon to do, particularly in the distributed or async space, Elixir can and will do them better on average.

Elixir is rapidly catching up in most of the areas that Python is still a good choice for. Livebook is a similar tool to Jupyter notebooks, but because it’s Elixir it has some super powers that Jupyter will never have. Nx, Axon, etc. are making huge inroads into the heart of Python’s machine learning offering.

If you’re writing a short script, by all means Python is a perfectly adequate solution. If you are writing a reasonably complex application, it is likely going to cause you issues and Elixir (as if those are the only two languages in the world) is likely a better choice. If there is any chance you want to take this thing and scale it, hands down Elixir should be your main consideration.

There are other reasons to choose Python, such as the support available in data science and machine learning, not to mention that the talent pool there is going to be already into Python. That being said, Elixir is rapidly catching up and for some applications in this field is already a better choice.

Personally, I’m very interested in pursuing Elixir to the bounds of its possibilities and if need be, I’ll hold my nose and use Python if that’s the way it has to be. Currently, my greatest interest in Python is to understand it well enough to transcribe code out of it and into Elixir.

Thanks to Jason Steving, the illustrious creator of Claro and Nicholas Geraedts for providing some very necessary sanity checking and push back.